.png)

Transform raw, unstructured information into clean, structured datasets ready for AI analysis and automation.

Automate the extraction, transformation, and loading of data from multiple sources — ensuring consistency, accuracy, and scalability.

Enhance LLM performance with Retrieval-Augmented Generation, combining real-time data access with contextual reasoning.

Deploy AI solutions that keep your data private and secure through confidential computing and end-to-end encryption.

Automatically clean, classify, and structure your datasets for optimal model LLM performance.

Identify the language of a given fact, so you can easily manage which annotator can assess the quality of your fact.

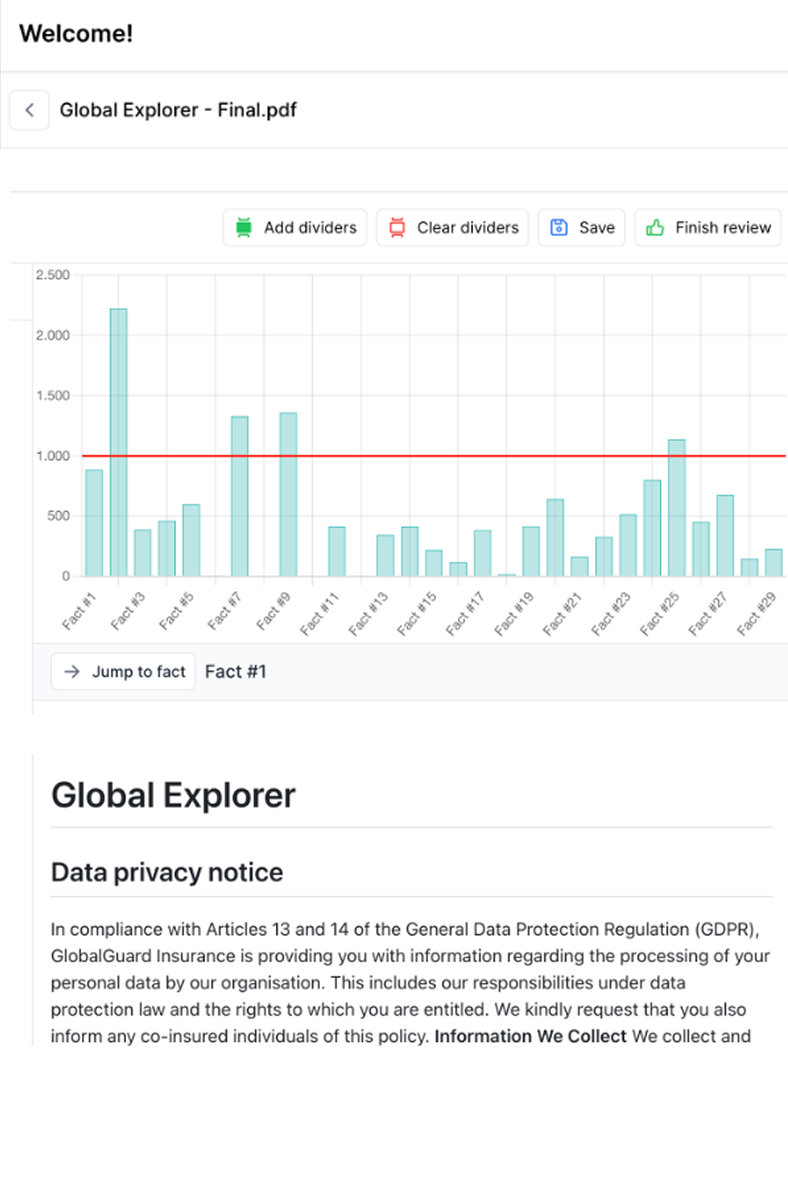

Assess the reliability and accuracy of a given fact. This AI block helps in prioritizing high-quality information and identifying potential inaccuracies or biases.

Scan and identify personally identifiable information (PII) within a dataset or text. This AI block is helpful for ensuring data privacy compliance and safeguarding sensitive user information.

Evaluate the intricacy and depth of a given fact to determine its level of complexity. Useful to decide which LLM to use if this fact is included.

Evaluate the frequency at which a particular fact is accessed or retrieved from a database. This AI block assists in identifying trending or popular data points, optimizing caching strategies, and ensuring efficient data retrieval.

Detect and isolate date and time details from a given text or dataset. Facilitates temporal analysis, event tracking, and data organization based on time-related parameters.

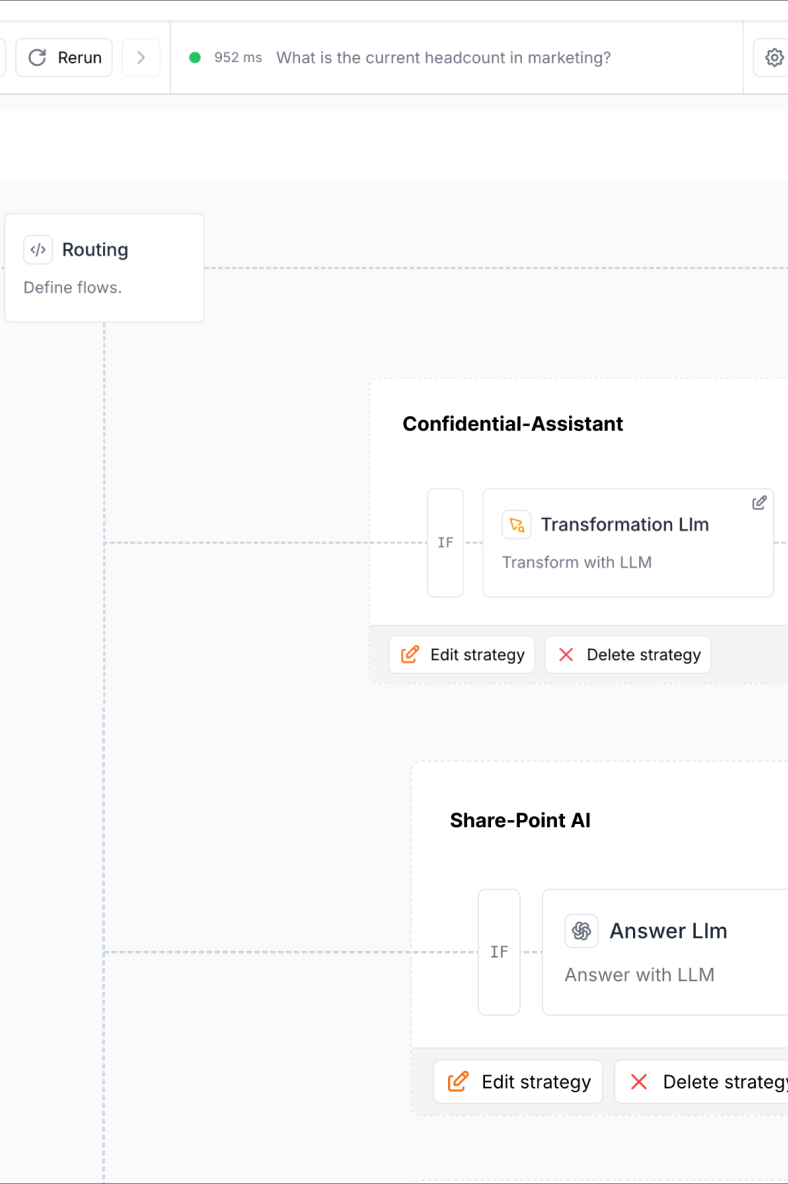

Create custom LLM applications that automate workflows, enhance decision-making, and keep full control of your data.

Identify the language of a given fact, so you can easily manage which annotator can assess the quality of your fact.

Handles multiple data types, from documents, data sources, websites to emails.

AI-powered insights to gauge and enhance your data quality. Do not poison your LLM with false data.

Vector search alone is not enough. You need to correctly model your data to get the best results. We help you to do that.

Automatically label your data with our LLM-powered labeling tool, or let annotators do the work for you.

You can easily configure new chunks and embedding models to create embeddings for your vector database.

Experience confidential AI that protects your data at every stage — from prompt to processing — with the full power of modern language models.

Built for organizations handling sensitive data. Privatemode enables GDPR-compliant, enterprise-grade AI adoption without the risk of shadow AI.

Run leading open-source models like LLaMA 3.3 or DeepSeek R1 securely, with flexibility to adapt to your own infrastructure or future models.

It runs in secure hardware enclaves (AMD SEV-SNP, Intel TDX) and protects your data from unauthorized access.

No prompts, responses, or metadata are stored or reused. Once a session ends, your data disappears — permanently.

Use Privatemode as a browser chat app or integrate its secure AI API into your own products — bringing privacy-first AI to your workflows.

Your data is encrypted before an LLM has access to it and stays encrypted even during AI processing. No one — not even the provider — can access it.

.png)

To understand how to make LLMs more reliable and trustworthy, we first need to understand why they fail.

If information about different topics is stored too closely together, the AI may retrieve the wrong dataset. This mix-up leads to confusing or incorrect responses.

Your AI model will identify 2 out of 5 relevant documents to answer your question, leading to incomplete answers (e.g. answering via the base tariff when there is also a special tariff).

Your AI model will answer your question, but you will not know why it answered the way it did.

A lot of your data is in unstructured documents, which are not machine-readable. This leads to errors in your data, and thus leading to wrong answers.

Piece by piece, we solve the above problems to make your AI trustworthy.

With our approach, the underlying data sources are first modeled in a mindmap-like structure to highlight the relationships between the data.

Given the filtered data, we use a language model to quote the data in a way that is understandable to the user and can be validated.

We use a data quality AI to ensure that the data is up-to-date and correct - always.

We apply customizable intent AI and psychology AI to infer what the user is looking for and filter the data accordingly via the mindmap-like structure.